Okechukwu Nwaozor had to pardon my chuckle when he told me he wanted to build a rival to ChatGPT. The disbelief was natural: he is a first-time founder, fresh out of secondary school, just seventeen, and a completely self-taught developer.

I chuckled again when he mentioned he’d raised ₦2.7 million — pocket change compared to OpenAI, the $500 billion company with over $40 billion in funding behind the model he’s trying to compete with.

But on a call with Techpoint Africa, Nwaozor made it clear he understood how unbelievable his ambitions sounded.

He’s been laughed at before. When he first announced on Facebook that he was building his own AI model, the comments ranged from disbelief to outright mockery. “People told me it couldn’t be done,” he recalls.

The doubt didn’t stop him. As unbelievable as it seems for a 17-year-old Nigerian to challenge a Silicon Valley giant, Nwaozor simply kept building. And he didn’t just build an idea; he built a working product. OkeyMeta’s model can already be tested publicly, and it performs well enough that I initially doubted it was truly trained from scratch.

When I asked OkeyMeta’s chatbot — OkeyAI — whether it was really an original model, it responded confidently:

“I understand why you might think that, given the similarity in some functionalities. But I am OkeyAI 4.0 DeepCognition, created by OkeyMeta Ltd, right here in Nigeria.”

It even credited its team: “Developed by Okechukwu Goodnews Nwaozor, the first ML engineer at OkeyMeta, with support from Precious Obiesie (Co-founder), Raji Abdulazeem Adeyemi (Lead Data Analyst), Shuaib Ali Abiodun (Head of Marketing), and Woleola Abdullateef (Product Designer).”

The story matched everything Nwaozor told me. But it left one question: how does a 17-year-old decide he’s going to build a large language model from scratch?

The inspiration for OkeyMeta

You would expect Nwaozor’s motivation to come from the global frenzy around ChatGPT, but that wasn’t the spark. His fascination began much earlier, with Google.

“I kept wondering how Google instantly gives you so many relevant results,” he says. That curiosity pulled him down a rabbit hole, one that led him to AI long before he ever imagined building his own model.

In a Facebook developer group, he saw people creating AI bots that could join conversations and respond like real humans. “It blew my mind,” he recalls.

That curiosity hardened into ambition in 2022. “I started by gathering data, which I used to train version one,” he said. “Later, I figured out how to get free data from open-source sites, and that helped me build version 2.0-basic.”

Like Chimdi of ChatATP, Nwaozor is a die-hard Mark Zuckerberg fan. So naturally, one of the first things he tried to build was a social media platform. Like every self-taught developer, he dabbled in many small projects, but OkeyMeta became the one he couldn’t let go of, his holy grail.

He eventually registered the company as OkeyMeta — a blend of his name and Zuckerberg’s Meta.

“To me, it means Okechukwu, go beyond the current state because ‘meta’ in tech means to transcend,” he explains.

And he has been trying to transcend for three years now, which means that at just 14 years old, he had already chosen this path and begun pursuing it relentlessly. Despite his age, he pulled together a small team of undergraduates who helped him push the model to where it is today.

But belief was hard to come by. “People kept doubting me,” he said. “They thought I’d built another ChatGPT wrapper, and I needed to change that perspective.”

Building an LLM from scratch

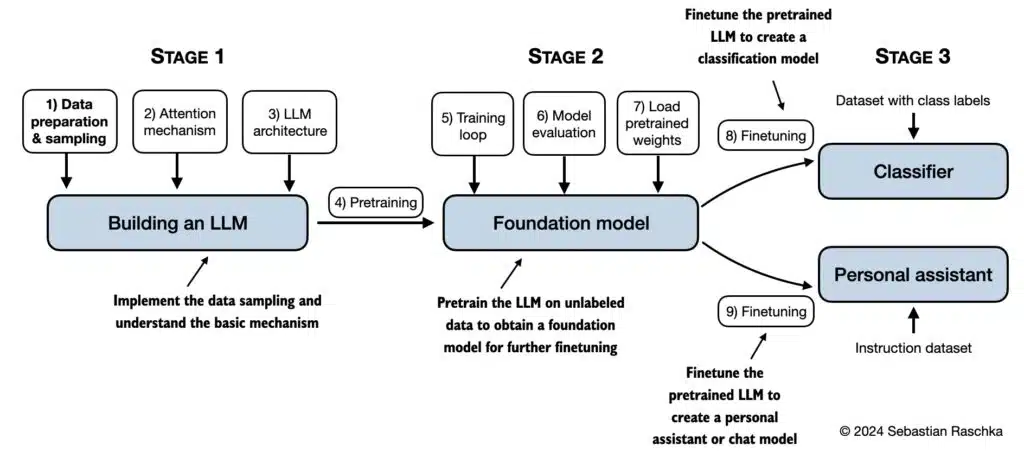

When Saheed Azeez built NaijaWeb in 2024, he gave a glimpse into what it takes to train an AI model from scratch. It involved collecting datasets, cleaning them, preprocessing them, and then training a model long enough for it to learn meaningful patterns.
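To make those four steps concrete, here is a deliberately tiny sketch in Python. It is not Azeez's or Nwaozor's code: it assumes a three-sentence made-up corpus and swaps the neural network for a simple bigram counter (a model that only learns which word tends to follow which), so the whole pipeline fits in a few lines. All function names are hypothetical.

```python
import re
from collections import Counter, defaultdict

def clean(text: str) -> str:
    """Cleaning step: lowercase, drop punctuation, collapse extra whitespace."""
    text = text.lower()
    text = re.sub(r"[^\w\s]", " ", text)  # keeps letters (including accented ones) and digits
    return re.sub(r"\s+", " ", text).strip()

def tokenize(text: str) -> list[str]:
    """Preprocessing step: split cleaned text into word tokens (an assumption;
    real LLMs use subword tokenizers)."""
    return text.split()

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Training step: count how often each word follows another."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for doc in corpus:
        tokens = tokenize(clean(doc))
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(model: dict[str, Counter], word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Collection step: a made-up corpus standing in for scraped text.
raw_corpus = [
    "Lagos is a BIG city!!",
    "Lagos is a busy   city.",
    "Abuja is a planned city.",
]

model = train_bigram(raw_corpus)
print(predict_next(model, "is"))
```

A real language model replaces the counting step with a neural network trained on billions of tokens, but the order of operations (collect, clean, preprocess, train) is the same.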

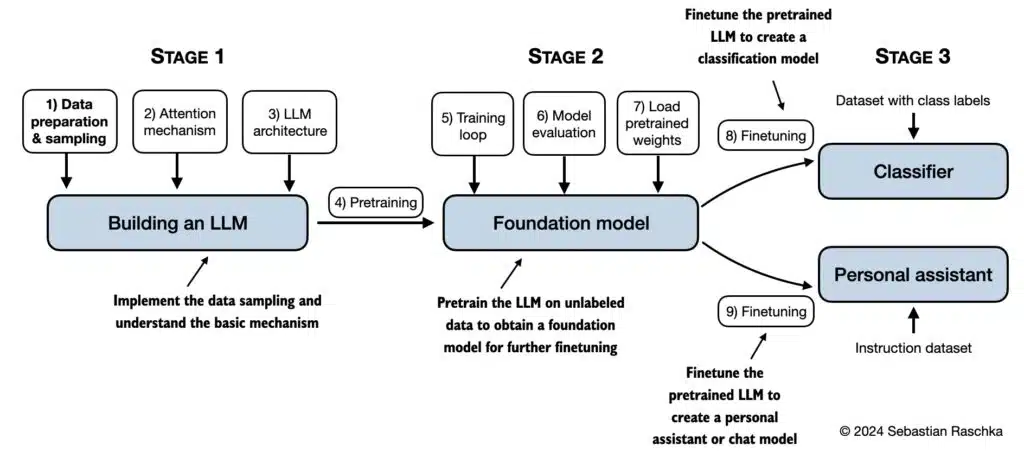

Even that process is difficult. But building a large language model (LLM) is an entirely different level of complexity.

Big AI labs such as OpenAI, Anthropic, and Google train their models on trillions of words, using thousands of GPUs, and budgets that stretch into hundreds of millions of dollars.

But things have changed. Thanks to open-source datasets, public research, and access to rentable cloud GPUs, a determined developer can now build smaller, specialised LLMs without Silicon Valley-level resources.

Ten years ago, this would have been impossible. Today, developers can rent compute from Google Cloud, which is exactly what Nwaozor is doing.

Building a frontier-scale model comparable to GPT-3 or GPT-4 would require compute and funding far beyond his ₦2.7 million.

Nwaozor gathered African-focused datasets, cleaned and prepared them himself, trained multiple versions of the model from scratch, built his own training pipelines, and created an interface and API that developers can use.

He even went as far as designing his own Transformer architecture — the Situated Symbolic Artificial Intelligence Language Model (SSAILM) — which he believes makes OkeyMeta the first truly African-origin LLM.

He contrasted this with the competitors he studied. According to him, Awarri’s N-Atlas model, another Nigerian LLM project, wasn’t created from scratch. “We discovered that N-Atlas was built on Meta’s Llama 3,” he says.

For Nwaozor, this distinction matters. He wants people to understand the difference between fine-tuning an existing model and building a foundation model from the ground up, even if his own model is much smaller than global giants.

The future of OkeyMeta

Taking on OpenAI may sound delusional, but that delusion is working. OkeyAI now has nearly 1,000 users, while the OkeyMeta API platform has attracted 8,000 developers, with about half of them actively building on it.

To Nwaozor, however, this is slow progress. “We’ve not really gotten the kind of publicity we want,” he said quietly. Despite three years of work and an ambitious vision, visibility remains painfully low. “Our posts only get one or two likes,” he almost whispered. This is why he’s focused on growing user numbers rather than making money.

“All the developers are using it for free. It is important for us to create awareness first,” he explained. Free access is part of his strategy to drive adoption, but he also believes the platform already has features that give it an edge over big players.

For example, OkeyMeta doesn’t cap memory the way models like ChatGPT do.

“ChatGPT and other AI chatbots cap memory at 1,000 tokens — ours is unlimited. We’ve also seen less hallucination compared to others like Gemini,” he claims.

The team has also built clever agent-based features that allow the model to take actions only when specific conditions are met. For example, replying to an email only after the sender expresses interest in buying a product.
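The article does not describe how OkeyMeta implements this, so the following is only a rough Python sketch of the general idea: pair a condition with an action and run the action only when the condition holds. Every name here (`Email`, `ConditionalAction`, `shows_buying_interest`, the phrase list) is hypothetical and not part of the OkeyMeta API.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Email:
    sender: str
    body: str

# Hypothetical keyword list; a real agent would more likely ask the
# language model itself to judge the sender's intent.
INTEREST_PHRASES = ("i want to buy", "i'd like to order", "how much", "price")

def shows_buying_interest(email: Email) -> bool:
    """Condition: does the message look like a purchase enquiry?"""
    text = email.body.lower()
    return any(phrase in text for phrase in INTEREST_PHRASES)

def draft_reply(email: Email) -> str:
    """Action: produce a reply (a fixed template here, for illustration)."""
    return f"Hi {email.sender}, thanks for your interest. Here are the details."

@dataclass
class ConditionalAction:
    condition: Callable[[Email], bool]
    action: Callable[[Email], str]

    def run(self, email: Email) -> Optional[str]:
        """Run the action only if the condition is met; otherwise do nothing."""
        if self.condition(email):
            return self.action(email)
        return None

rule = ConditionalAction(condition=shows_buying_interest, action=draft_reply)

print(rule.run(Email("Ada", "How much is the blue bag?")))  # a reply is drafted
print(rule.run(Email("Bola", "Just saying hello")))         # no action taken
```

Keeping the condition and the action as separate functions means either one can be swapped out, for example replacing the keyword check with a model call, without touching the other.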

But growth is a double-edged sword.

OkeyMeta currently rents GPUs from Google at $100 per month, and more users mean higher compute costs, which the team simply cannot afford right now.

Nwaozor knows his ₦2.7 million in funding will soon run out. He has received some investor interest, but not enough, and some of it, he said, came with terms that would harm him long-term. Raising the right kind of capital is now a matter of survival.

Still, his conviction remains unshaken. He imagines a future where OkeyMeta becomes a global AI company, and where the people who laughed at him on Facebook eventually understand what he was trying to do.

OkeyMeta is tiny compared to the giants he hopes to challenge, but it represents something of an early, homegrown attempt at foundational AI coming from a teenager in Nigeria.

To go further, he will need more than passion and code. He will need capital, mentorship, infrastructure, and the kind of guidance required to run a company at the scale he dreams about.